Suriya D Murthy

My personal website and portfolio

Project maintained by codesavory. Hosted on GitHub Pages. Theme by mattgraham.

IMAGEimate - An end-to-end pipeline to create realistic animatable 3D Avatars from a Single Image using Neural Networks

This work presents an end-to-end pipeline that converts a single RGB image into a realistic, animatable 3D avatar, which can be used to easily create virtual humans for VR and AR applications such as Adobe Aero. The project stitches together existing neural network techniques to solve two subproblems in this domain, (1) image to mesh and (2) mesh rigging and skinning, and then (3) uses the rigged result to re-pose the mesh and apply animations.
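Re-posing a rigged and skinned mesh is typically done with linear blend skinning, the deformation model that SMPL-style parametric bodies use: each vertex moves by a weighted blend of its joints' rigid transforms, v' = Σ_j w_j (R_j v + t_j). The pure-Python sketch below illustrates only that technique; it is not IMAGEimate's code, and the function names and the (rotation, translation) layout of each joint transform are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a row-major 3x3 matrix by a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def linear_blend_skinning(
    vertices: Sequence[Vec3],
    weights: Sequence[Sequence[float]],
    joint_transforms: Sequence[Tuple[Mat3, Vec3]],
) -> List[Vec3]:
    """Re-pose rest-pose vertices: v' = sum_j w_ij * (R_j v + t_j).

    weights[i][j] is the (hypothetical) skinning weight of joint j on vertex i,
    with each row summing to 1. joint_transforms[j] = (R_j, t_j) maps the rest
    pose to the target pose for joint j.
    """
    posed = []
    for v, w in zip(vertices, weights):
        acc = [0.0, 0.0, 0.0]
        for w_j, (rot, trans) in zip(w, joint_transforms):
            if w_j == 0.0:
                continue  # joint has no influence on this vertex
            rv = mat_vec(rot, v)
            for k in range(3):
                acc[k] += w_j * (rv[k] + trans[k])
        posed.append(tuple(acc))
    return posed


# Usage: joint 0 stays still, joint 1 rotates 90 degrees about z.
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
ROT_Z_90 = ((0.0, -1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
rest = [(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
weights = [[1.0, 0.0], [0.5, 0.5]]  # second vertex is shared by both joints
transforms = [(IDENTITY, (0.0, 0.0, 0.0)), (ROT_Z_90, (0.0, 0.0, 0.0))]
result = linear_blend_skinning(rest, weights, transforms)
print(result)  # → [(1.0, 0.0, 0.0), (0.5, 0.5, 0.0)]
```

The blended vertex lands halfway between its two joint-transformed positions, which is also why plain linear blend skinning can shrink volume near strongly bent joints.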

Paper at VRST ’21 Conference

GitHub Code

Project Slides

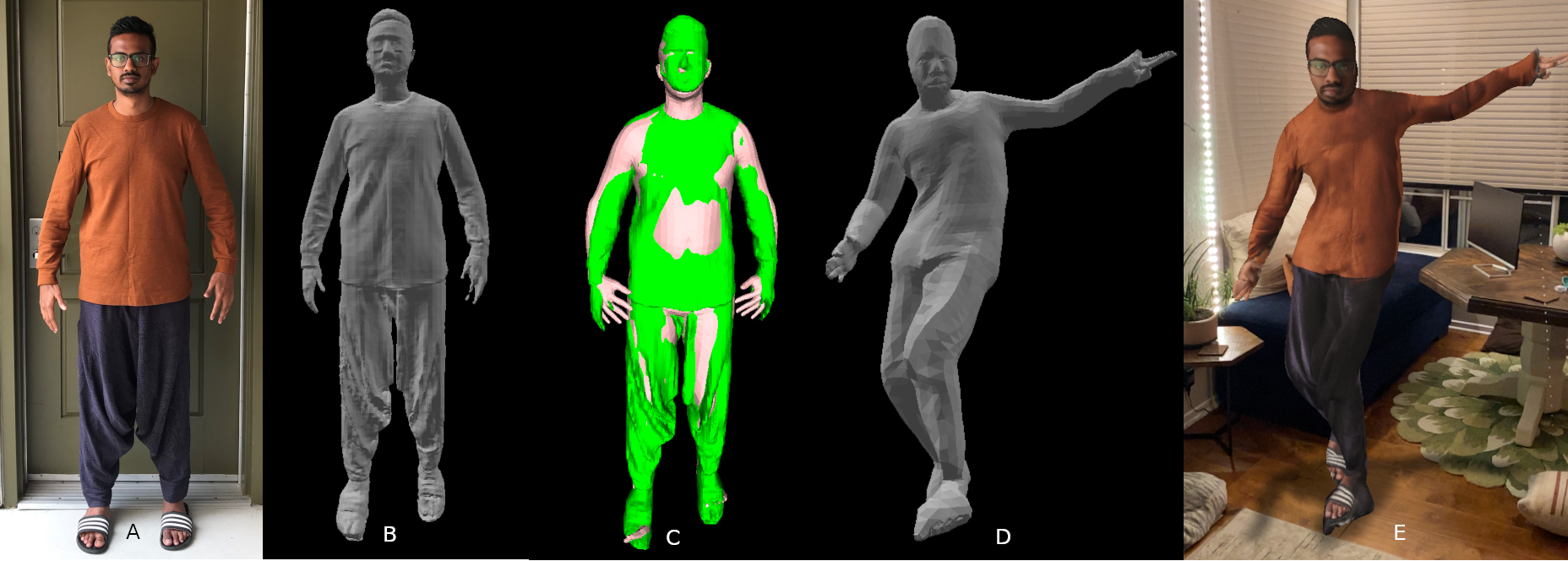

Stages of the IMAGEimate pipeline

Results of each stage in the pipeline: A) Input RGB image


B) Predicted 3D mesh using PIFuHD [1]

C) Parametric model fit using IPNet [2]

D) Mesh reposed using a pose from AIST++ [3]

E) Augmented using Adobe Aero and textured using Substance Painter
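Motion data such as the AIST++ poses used in stage D is commonly expressed as SMPL pose parameters, that is, one axis-angle vector per joint; before skinning, each vector must be turned into a rotation matrix. A minimal sketch of Rodrigues' formula, R = I + sin(θ)K + (1 − cos(θ))K², follows. It assumes the usual axis-angle convention (vector direction is the rotation axis, its norm is the angle in radians) and a flat pose of 3 values per joint; the function names are hypothetical and not taken from the IMAGEimate code.

```python
import math
from typing import List, Sequence


def axis_angle_to_matrix(aa: Sequence[float]) -> List[List[float]]:
    """Convert an axis-angle vector to a 3x3 rotation matrix (Rodrigues' formula)."""
    theta = math.sqrt(sum(c * c for c in aa))
    if theta < 1e-12:
        # Zero rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in aa)  # unit rotation axis
    # K is the skew-symmetric cross-product matrix of the unit axis.
    k = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    k2 = [[sum(k[r][i] * k[i][c] for i in range(3)) for c in range(3)] for r in range(3)]
    s, one_minus_c = math.sin(theta), 1.0 - math.cos(theta)
    return [
        [(1.0 if r == c else 0.0) + s * k[r][c] + one_minus_c * k2[r][c] for c in range(3)]
        for r in range(3)
    ]


def pose_to_matrices(pose: Sequence[float]) -> List[List[List[float]]]:
    """Split a flat per-frame pose (3 axis-angle values per joint) into rotation matrices."""
    if len(pose) % 3 != 0:
        raise ValueError("pose length must be a multiple of 3")
    return [axis_angle_to_matrix(pose[i:i + 3]) for i in range(0, len(pose), 3)]


# Example: a 90-degree rotation about z should map the x axis onto the y axis.
r = axis_angle_to_matrix([0.0, 0.0, math.pi / 2])
x_rotated = [sum(r[row][col] * v for col, v in enumerate((1.0, 0.0, 0.0))) for row in range(3)]
print([round(c, 6) for c in x_rotated])

# A 72-value pose corresponds to the 24 joints of the SMPL skeleton.
mats = pose_to_matrices([0.0] * 72)
```

Each per-joint matrix would then be chained along the skeleton's kinematic tree to produce the world-space joint transforms that skinning consumes.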

References

[1] PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization (CVPR 2020). https://shunsukesaito.github.io/PIFuHD/

[2] IPNet: Combining Implicit Function Learning and Parametric Models for 3D Human Reconstruction (ECCV 2020). https://virtualhumans.mpi-inf.mpg.de/ipnet/

[3] AIST++: Learn to Dance with AIST++: Music Conditioned 3D Dance Generation (CVPR 2021). https://google.github.io/aichoreographer/